A/B Testing for Websites

A/B testing isn’t just a trendy term thrown around in the world of affiliate marketing; it’s a critical tool that can reveal the path to better conversion rates and higher earnings. At its core, A/B testing is about comparison and choice—the A option versus the B option—but the implications of this simple concept can be profound for your marketing strategy.

It might be tempting to think that the success of these tests hinges on the tool you use, but that’s not exactly the case. Sure, using a sophisticated tool can make the process smoother, but what’s more important is how you design your experiments. You could have access to the most advanced testing tools out there, but without asking the right questions, you won’t uncover the insights you need to succeed.

So what should you be testing? If you’re aiming to boost those affiliate link clicks, your focus should be razor-sharp. Changing one element at a time—whether it’s your offer’s copy, the call-to-action (CTA) placement or design, or the images you choose to showcase—can make a clear impact. You’ll be able to pinpoint exactly what resonates with your audience instead of being left in the dark, wondering why one entire page outperformed another.

This concentrated approach not only provides more precise results, but it also builds a foundation of knowledge about your audience’s preferences. Over time, with each focused test, a picture emerges: a portrait of your optimal offers, copy, and promotional strategies fine-tuned through data-driven decision-making.

Honing In on Critical Testing Elements

A/B testing can be a game-changer for affiliate marketers, but its real power lies less in the testing itself than in how each test is structured. Consider an affiliate marketer who decides to test two landing pages that differ drastically: different layouts, different color schemes, even different promotional language. Intuition might suggest this will clearly show what works best. However, when the pages produce different results, it becomes impossible to pinpoint which elements made the difference. It’s akin to comparing apples to oranges.

This is why focusing on isolated elements within your A/B tests is essential. When you tweak one aspect at a time, such as the persuasive power of your copy, the position of your call-to-action (CTA) button, its visual appeal, or even the type of images you use to showcase products, the subsequent data becomes significantly more meaningful.
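One way to enforce the one-change rule is to describe each page version as a set of named elements and check how many differ before you launch. This is a minimal sketch. The element names (`cta_color`, `hero_image` and so on) and the `changed_elements` helper are hypothetical and not a feature of any testing tool.

```python
def changed_elements(control: dict, variant: dict) -> list:
    """Return the names of elements whose values differ between two page versions."""
    all_keys = control.keys() | variant.keys()
    return sorted(key for key in all_keys if control.get(key) != variant.get(key))


# Hypothetical description of the current page (the control).
control = {
    "headline": "The 5 Best Running Shoes of the Year",
    "cta_color": "red",
    "cta_position": "above the fold",
    "hero_image": "runner.jpg",
}
# The variant copies the control and changes exactly one element.
variant = {**control, "cta_color": "blue"}

diff = changed_elements(control, variant)
if len(diff) != 1:
    raise ValueError(f"Variant changes {len(diff)} elements: {diff}; isolate one.")
print(f"Testing a single element: {diff[0]}")
```

Suppose someone also swaps the hero image. The check then fails, which flags the apples-to-oranges comparison before any traffic is spent on it.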

Say you’ve changed the color of your call-to-action (CTA) button and notice a surge in clicks. If the difference holds up once enough visitors have seen both versions, this suggests that the color has a direct impact on user engagement. On the other hand, if conversion rates don’t budge when you switch up the copy, you’ll know the copy likely isn’t a contributing factor. This level of specificity produces actionable insights and lets you refine your approach methodically.

Focusing on a single change is what makes a result attributable. When only one element differs, any difference in performance can be traced back to it. These focused tests also have a ripple effect: over time, they let you compile a profile of what your particular audience responds to most favorably. With that knowledge, you can tailor your campaigns so they resonate better and drive more meaningful clicks on your affiliate links.

Remember, the goal here is not just to ascertain which version ‘wins’ but to understand WHY it wins. As an affiliate, you need precise answers, because they translate into optimized promotions and, ultimately, increased revenue. It’s about being SMART with your testing tactics—Simple Measures of A/B Research Technique—that align with your objective of enhancing click-through rates and conversions.

Analyzing the Importance of Sufficient Data in A/B Testing

Have you been tempted to end an A/B test early because one variant seemed to lead by a mile? I have been there, but I’ve learned that jumping to conclusions can do more harm than good. Why’s that? Let me explain.

The truth is, validity in A/B testing hinges on one crucial factor: sample size. A common scenario is when a small number of users shows a strong lean toward one variant, prompting an immediate — yet often misleading — verdict. What’s missing? A significant chunk of data that could tell a different story.

I remember my first brush with early test termination. I saw a 20% vs. 5% conversion rate difference between two variants after just 50 visits. It looked like a clear-cut win, but I decided to wait, following a mentor’s advice. Sure enough, at 500 visits the gap had shrunk considerably. By 1,000 visits, it was almost nonexistent.
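Why does an early lead evaporate? A small simulation makes the risk concrete. It runs A/A tests, in which both variants share the same true conversion rate (an assumed 5%). It checks for significance every 50 visitors using a standard two-proportion z-test and stops at the first "significant" result. All parameter values are illustrative assumptions, not the data from the story above.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions (or only conversions) so far: nothing to compare
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def peeking_false_positive_rate(true_rate: float = 0.05, visitors_per_arm: int = 1000,
                                check_every: int = 50, runs: int = 500,
                                seed: int = 1) -> float:
    """Share of A/A tests (no real difference) that get stopped as a 'win'."""
    rng = random.Random(seed)
    false_wins = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        for n in range(1, visitors_per_arm + 1):
            # Both arms convert at the same true rate, so any "win" is pure chance.
            conv_a += rng.random() < true_rate
            conv_b += rng.random() < true_rate
            if n % check_every == 0 and two_proportion_p_value(conv_a, n, conv_b, n) < 0.05:
                false_wins += 1
                break
    return false_wins / runs


peeking = peeking_false_positive_rate()                  # look every 50 visitors
single_look = peeking_false_positive_rate(check_every=1000)  # look once, at the end
print("peeking every 50 visitors:", peeking)
print("single look at the end:   ", single_look)
```

The exact figures depend on the random seed. Even so, the peeking version typically crowns far more false "winners" than the single look, which stays near the nominal 5%.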

In A/B testing, your patience will inevitably be tested, especially if your site isn’t a high-traffic juggernaut. Patience isn’t just a virtue here; it’s a necessity. Waiting for at least 1,000 users to take part in your test is a common rule of thumb. Treat it as a floor, not a guarantee: the sample you actually need depends on your baseline conversion rate and on how small a difference you want to detect. Reaching that sample is what lets you check for statistical significance, so you KNOW, rather than guess, which variant is the true performer.
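How many visitors is "enough"? A common way to answer that is a power calculation for comparing two conversion rates. The sketch below assumes a two-sided test at a 0.05 significance level and 80% power. Those are conventional defaults, not figures from this article. The `baseline_rate` and `min_detectable_lift` inputs are hypothetical values you would take from your own analytics.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, min_detectable_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%.
    min_detectable_lift: smallest relative improvement worth detecting, e.g. 0.20 for +20%.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


print(sample_size_per_variant(0.05, 0.20))  # 5% baseline, hoping to detect a 20% lift
print(sample_size_per_variant(0.05, 0.50))  # a bigger effect needs far fewer visitors
```

With a 5% baseline and a hoped-for 20% lift, the first call asks for roughly 8,000 visitors per variant, well beyond the 1,000-user mark. Even a 50% lift still needs close to 1,500 per variant.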

Don’t misunderstand; getting excited about promising early trends is natural. Yet, the beauty of data is in its eventual certainty, not its enticing beginnings. I encourage smaller sites to embrace A/B testing, even if it means letting a test run for a lengthier period. It’s worth it when you refine your approach based on solid evidence, not gut feel or inadequate sample sizes.

As we turn toward the next section, remember that this patience is an investment in your affiliate site’s future. The insights gleaned from robust data will empower you to optimize systematically and with confidence. STICK WITH IT, and the rewards will come with compounded interest, helping you maximize your affiliate success.

Maximizing A/B Test Outcomes for Affiliate Success

Optimizing your affiliate marketing strategy hinges on interpreting A/B test results accurately and implementing changes decisively. It’s not just about knowing which variation garnered more clicks or conversions but understanding WHY it performed better. By analyzing the test outcomes thoroughly, you can align these insights with your overall affiliate marketing objectives.

Now that you’ve identified the successful elements through rigorous testing, it’s time to build upon these findings. Implement the winning features across other offers or pages and monitor the performance closely. This strategic approach to incremental changes ensures that you’re consistently moving toward peak optimization without upheaval.

Case studies in affiliate marketing often highlight the power of gradual, data-driven optimization. Affiliates who consistently apply lessons learned from A/B testing see a compound effect on their conversion rates and, ultimately, their earnings. They don’t just change a CTA button and hope for the best; they adapt their entire strategy based on the narrative the data tells.

Your commitment to continual learning and adaptation through A/B testing paves the way for sustainable growth. As you refine your offers, develop more compelling copy, and enhance the user experience, clicks through to your affiliate links should increase. This perpetual cycle of testing, learning, and optimizing is the cornerstone of a resilient affiliate marketing strategy.

In conclusion, A/B testing tools are valuable, but they’re only instruments in the larger strategy of crafting compelling offers and intuitive experiences for your audience. Embrace the discipline of running these experiments, and you’ll unlock a deeper understanding of your audience’s preferences. More importantly, through practical application and patience, your affiliate marketing efforts will become more effective and profitable over time.

How To Make an A/B Test

By now, you’re probably wondering how to run an A/B test so you can improve your conversion rate. To give you a helping hand, we’ve outlined how to perform A/B testing in five easy steps to optimize any ad, landing page, or email.

1. Determine the goal of your test

First things first, you need to outline your business goals. This will give you a solid hypothesis for A/B testing and help you to stay on track throughout the process.

Clear goals also tie your testing to the overall success of the company. By outlining them up front, you can be sure that your efforts contribute to the growth of the business.

So how do you figure out what your goals should be? The answer is simple.

Ask yourself what you want to learn from the A/B test.

Do you want to increase social media engagement? Improve your website conversion rate? Increase your email open rates? The answer to these questions will tell you what your goals should be.

But whatever you do, don’t jump in and start testing random button sizes and colors. Your tests need to have a purpose to make them worthwhile.

2. Identify a variable to test

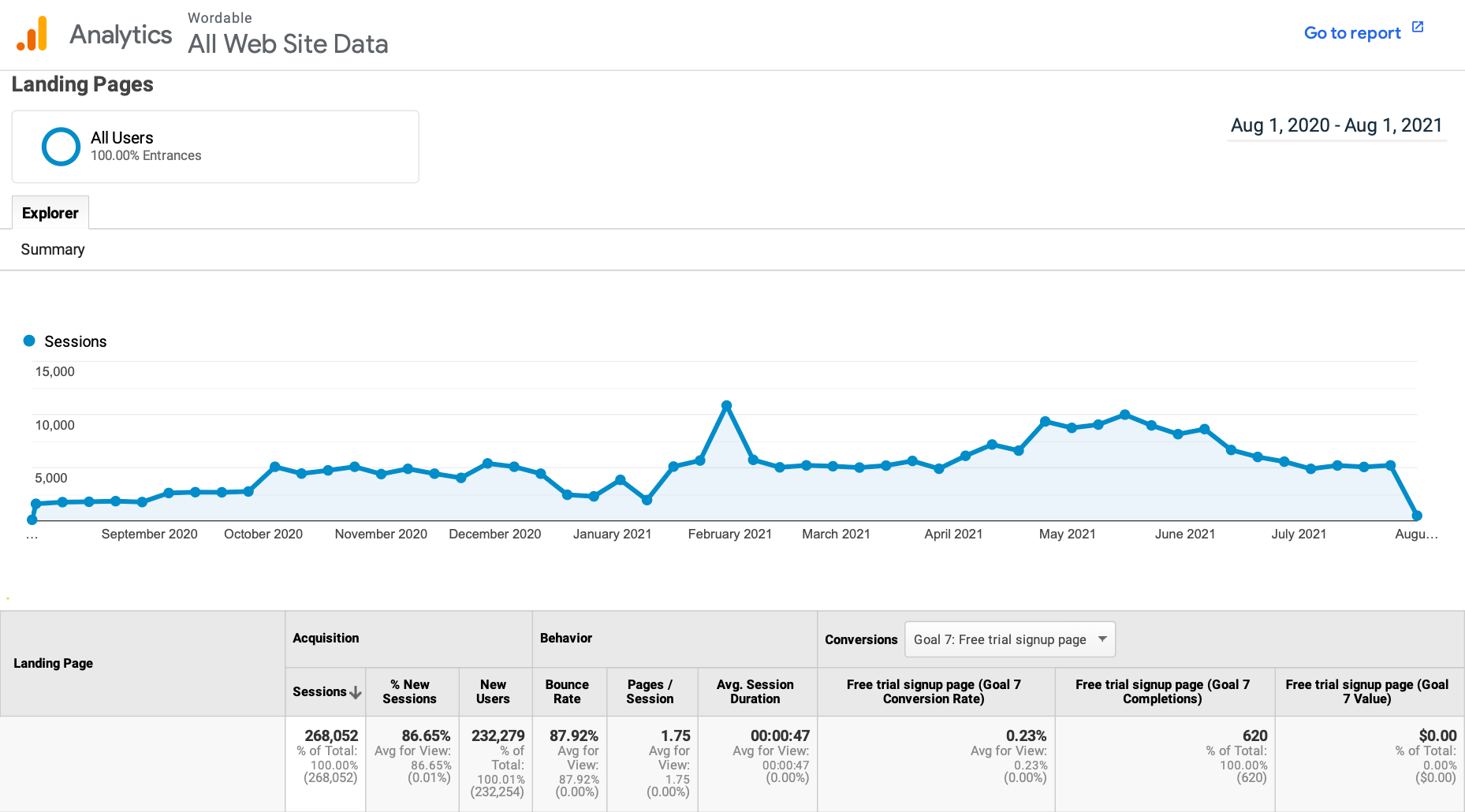

You’ve outlined your goals. Now you need to find the right variable to test, which is where data comes in handy. Using past data and analytics, you can identify your underperforming areas and where you need to focus your marketing efforts.

For example, let’s say your goal is to improve the user experience on your website.

To find the right variable, you review Google Analytics to find the pages with the highest bounce rate.
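If you export page-level data from your analytics tool, ranking pages by bounce rate takes only a few lines. This sketch assumes you already have sessions and bounces per page. The `PageStats` fields, the 100-session cutoff and the sample numbers are all made up for illustration. The cutoff stops low-traffic pages, whose bounce rates are noisy, from topping the list.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    path: str
    sessions: int
    bounces: int  # sessions that left without a second interaction

    @property
    def bounce_rate(self) -> float:
        return self.bounces / self.sessions if self.sessions else 0.0


def worst_pages(pages: list, min_sessions: int = 100, top_n: int = 5) -> list:
    """Highest-bounce pages, ignoring pages with too little traffic to judge."""
    eligible = [p for p in pages if p.sessions >= min_sessions]
    return sorted(eligible, key=lambda p: p.bounce_rate, reverse=True)[:top_n]


# Made-up numbers standing in for an analytics export.
pages = [
    PageStats("/best-running-shoes", 1200, 840),
    PageStats("/about", 300, 90),
    PageStats("/gear-review", 40, 38),  # too few sessions, filtered out
    PageStats("/budget-headphones", 900, 450),
]

for page in worst_pages(pages, top_n=2):
    print(f"{page.path}: {page.bounce_rate:.0%} bounce rate")
```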

Once you’ve narrowed down your search, you can compare these pages with your most successful landing pages. Is there anything different between them? If the answer is yes, this is your variable for testing.

You could also use multivariate testing to test more than one variable at once. Your variable could be something as simple as a headline, a header image, or the wording on your CTA. This is also your hypothesis: “If we change [X thing], we will increase [goal].” Now you just have to prove yourself right.
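Multivariate testing compares every combination of the elements you vary, so the number of page variants multiplies quickly. Each combination needs its own share of traffic. This sketch enumerates the combinations for some hypothetical headline, image and CTA options.

```python
from itertools import product

# Hypothetical options for each element in a multivariate test.
elements = {
    "headline": ["Save on running shoes", "Top-rated running shoes"],
    "header_image": ["runner.jpg", "shoe-closeup.jpg"],
    "cta_text": ["Check price", "See the deal", "Buy now"],
}


def multivariate_variants(options: dict) -> list:
    """Every combination of element options, one dict per page variant."""
    names = list(options)
    return [dict(zip(names, combo)) for combo in product(*options.values())]


variants = multivariate_variants(elements)
print(len(variants))  # 2 headlines x 2 images x 3 CTAs = 12 variants
for v in variants[:3]:
    print(v)
```

Twelve variants means splitting your traffic twelve ways. That is why a multivariate test usually needs far more visitors than a simple A/B test before any result can be trusted.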

3. Use the right testing tool

To make the most of your A/B test, you need to use the right testing program.

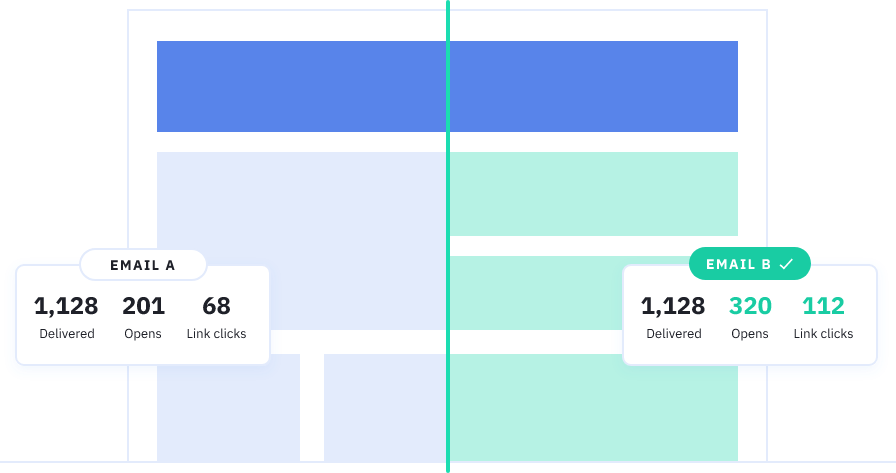

If you want to split-test your emails, a platform like ActiveCampaign is a good choice. It’s equipped for email testing: you can track your campaigns, automate your split tests, and easily review the results.

However, not all software is as user-friendly and intuitive as ActiveCampaign.

If you make the wrong choice, you’re stuck using a platform that restricts your testing capabilities. As a result, your A/B tests could suffer, leaving you with unreliable results.

So make sure you find the testing program that’s best suited to your A/B test. It makes the entire process more efficient and easier to manage, and it helps you get the most out of your testing.

4. Set up your test

Using whatever platform you’ve chosen, it’s time to get things up and running. Unfortunately, we can’t give you a step-by-step guide to set up your test because every platform is different.
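Every platform handles setup differently. Most, however, need to split visitors between variants consistently, so that a returning visitor doesn’t flip between versions. This is a minimal, hypothetical sketch of one common approach: hash a visitor ID, assumed here to come from a first-party cookie, together with an experiment name. The function and experiment names are illustrative only.

```python
import hashlib
from collections import Counter


def assign_variant(visitor_id: str, experiment: str, variants: tuple = ("A", "B")) -> str:
    """Deterministically map a visitor to a variant.

    Hashing the experiment name together with the visitor ID means the same
    visitor always lands in the same bucket for this experiment, while
    different experiments split visitors independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Hypothetical visitor IDs, e.g. read from a first-party cookie.
counts = Counter(assign_variant(f"visitor-{i}", "cta-color") for i in range(10_000))
print(counts)  # roughly a 50/50 split
print(assign_variant("visitor-42", "cta-color") == assign_variant("visitor-42", "cta-color"))
```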

That said, we do advise running each A/B test on a single traffic source rather than mixing traffic from several sources.

Why? Because the results will be more accurate.

You need to compare like for like. Segmenting your results by traffic source lets you review them with as much clarity as possible.
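Segmenting results by traffic source can be as simple as grouping conversions by source and variant. The visit log below is a hypothetical toy example, not real data, and `conversion_by_source` is an illustrative helper.

```python
from collections import defaultdict

# Hypothetical visit log: (traffic_source, variant, converted)
visits = [
    ("google", "A", True), ("google", "A", False),
    ("google", "B", True), ("google", "B", True),
    ("pinterest", "A", True), ("pinterest", "A", False),
    ("pinterest", "B", False), ("pinterest", "B", False),
]


def conversion_by_source(log):
    """Conversion rate for each (traffic_source, variant) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, visits]
    for source, variant, converted in log:
        totals[(source, variant)][0] += int(converted)
        totals[(source, variant)][1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


for (source, variant), rate in sorted(conversion_by_source(visits).items()):
    print(f"{source:<10} variant {variant}: {rate:.0%}")
```

In this toy log, both variants convert 2 of 4 visits overall, so the pooled numbers show no difference. Broken down by source, though, variant B does better on Google traffic and worse on Pinterest traffic. Mixing the sources hides that pattern.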

5. Track and measure the results

Throughout the test duration, you need to continually track the performance. This will allow you to make any changes if the test isn’t running to plan. And when the test is over, you can measure the results to find the winning variation and review the successes and failures.

At this stage, you can work out the changes you need to make to improve the customer experience. But if there’s little to no difference between your variations (less than 1%), you might need to keep the test running.

Why?

Because you need a bigger dataset to draw conclusions.

This is where statistical significance comes in handy.

What is statistical significance?

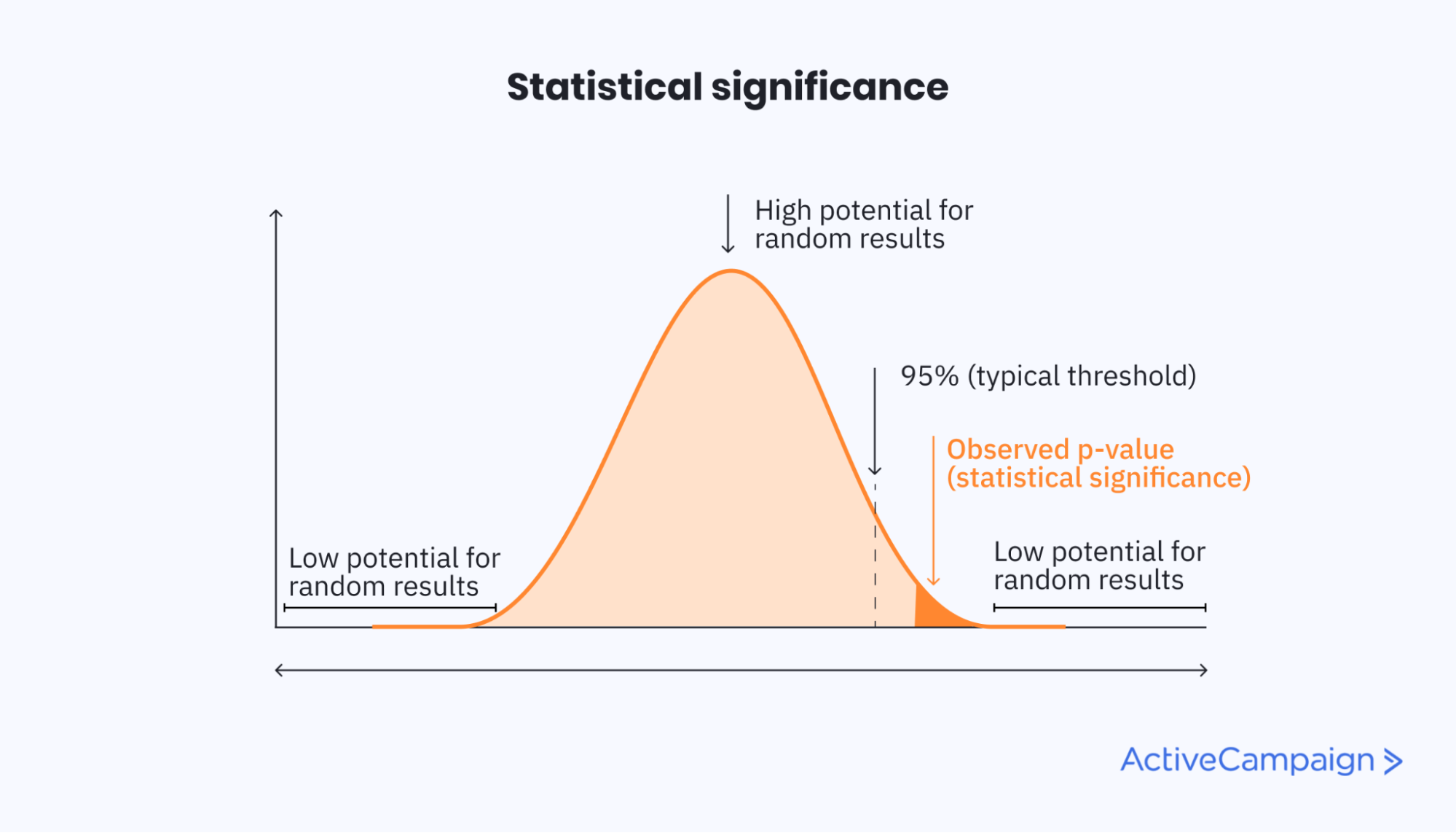

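Statistical significance tells you how unlikely your test results would be if the change you made had no real effect. It doesn’t mathematically prove that a result is reliable, but it gives you a standard way to judge whether a difference can be trusted. In other words, an A/B test result is statistically significant when the observed difference is unlikely to be explained by chance alone.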


Here’s a breakdown of the elements of statistical significance:

The p-value: This is the probability of seeing a difference at least as large as the one you observed if the change actually had no effect. The smaller the p-value, the stronger the evidence that the difference is real rather than chance. A p-value below 0.05 is the standard threshold for calling a result statistically significant.

Sample size: How big is the dataset? If it’s too small, the results may not be reliable.

Confidence level: This is the flip side of the p-value threshold. It expresses how strictly you rule out chance before calling a result real. The typical confidence level is 95%, which corresponds to a p-value threshold of 0.05.
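Confidence level and sample size work together. One way to see this is a confidence interval for the difference between two conversion rates. If the 95% interval excludes zero, the result is significant at roughly the 0.05 level. The sketch uses the simple normal-approximation (Wald) interval, an assumed choice that behaves poorly with very small counts. The conversion numbers are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple:
    """Normal-approximation (Wald) interval for the difference p_b - p_a."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 at 95%
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Same conversion rates (5% vs 6.5%), two different sample sizes.
examples = [(50, 1_000, 65, 1_000), (500, 10_000, 650, 10_000)]
for conv_a, n_a, conv_b, n_b in examples:
    low, high = diff_confidence_interval(conv_a, n_a, conv_b, n_b)
    verdict = "excludes 0" if low > 0 or high < 0 else "includes 0"
    print(f"n={n_a} per variant: 95% CI = [{low:.2%}, {high:.2%}] ({verdict})")
```

At 1,000 visitors per variant, the interval for a 5% vs. 6.5% comparison still includes zero. At 10,000 per variant, the same rates give an interval that excludes it.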

Let’s use an example to put it into context. Imagine you run an A/B test on your landing page. On your current landing page, your CTA button is red. On the testing page, it’s blue. After 1,000 website visits, you get 10 sales from the red button, and 11 sales from the blue button.

Because these results are so similar, the test gives you no real evidence that changing the color made any difference.

This means that it’s not statistically significant.

But if the same test returned 10 sales from the red button and 261 sales from the blue button, it’s unlikely this occurred by chance.

This means it’s statistically significant.
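You can check the button example yourself with a two-proportion z-test, one standard approach. The example doesn’t say how the 1,000 visits were split, so this sketch assumes each page received 1,000 visits. If the visits were shared between the pages, use 500 instead. The 0.05 threshold matches the one given above.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple:
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


VISITS_PER_VARIANT = 1_000  # assumption: each button page got 1,000 visits
RED_SALES = 10

for blue_sales in (11, 261):
    z, p = two_proportion_z_test(RED_SALES, VISITS_PER_VARIANT, blue_sales, VISITS_PER_VARIANT)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"red {RED_SALES} vs blue {blue_sales}: z = {z:.2f}, p = {p:.3g} -> {verdict}")
```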

If you struggle to identify whether your results are statistically significant, there are platforms out there that can help.

Metrics to track

There are a few key metrics you can track in order to reach your goal (whether that be a conversion goal or overall engagement).

Higher conversion rate

When testing different variations, one of the most common metrics to track is your conversion rate.

As you experiment with different content types, header images, CTA anchor text, or subject lines, the number of recipients who click through to your website will vary. Those differences affect how many people engage with your business and, ultimately, how many prospects become qualified leads.

Boost in website traffic

Website traffic is another metric that’ll benefit from A/B testing.

To see a good boost in traffic, you can test different landing page headers, blog featured images, or blog titles. This will help you to find the best way to format and word your content so you catch more of your audience’s attention.

And more audience attention means more chances for them to sign up for a trial or demo and, ultimately, convert.

Lower bounce rate

If you’re seeing a high bounce rate on your website, it may be time to A/B test a few things.

You can try out different page formats, header sizes, or imagery to determine what works best to keep your visitors on the page for longer. By testing one variable at a time, you’ll learn which elements on the page aren’t working and can replace them with ones that perform better. (Source: PostHog)
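You can compute all three metrics (traffic, conversion rate and bounce rate) side by side for each variant from raw session records. This is a minimal sketch built on a hypothetical `Session` record and made-up data. It treats a single-page session as a bounce, which is a simplification, since analytics tools define bounces in their own ways.

```python
from dataclasses import dataclass


@dataclass
class Session:
    variant: str
    pages_viewed: int
    converted: bool


def summarize(sessions: list) -> dict:
    """Traffic, conversion rate, and bounce rate for each variant."""
    summary = {}
    for variant in sorted({s.variant for s in sessions}):
        group = [s for s in sessions if s.variant == variant]
        n = len(group)
        summary[variant] = {
            "sessions": n,
            "conversion_rate": sum(s.converted for s in group) / n,
            # Simplifying assumption: a single-page session counts as a bounce.
            "bounce_rate": sum(s.pages_viewed == 1 for s in group) / n,
        }
    return summary


# Tiny made-up sample; a real test needs far more sessions.
sessions = [
    Session("A", 1, False), Session("A", 1, False), Session("A", 3, True), Session("A", 2, False),
    Session("B", 2, True), Session("B", 4, True), Session("B", 1, False), Session("B", 3, False),
]
for variant, stats in summarize(sessions).items():
    print(variant, stats)
```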

Free and Low-Cost A/B Testing Tools

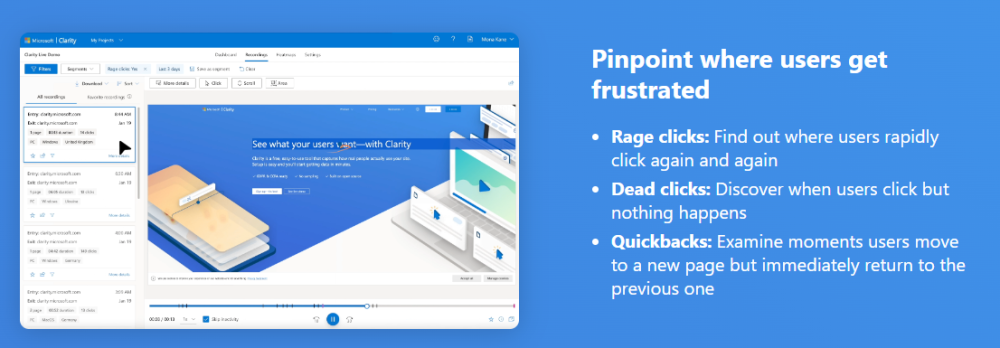

Microsoft Clarity

Microsoft Clarity provides insights into how users interact with your website through session replays, heatmaps, and user behavior analytics. It helps you understand user engagement, identify usability issues, and optimize the overall user experience.

Clarity is a free tool that offers everything upfront. There are no paid upgrades; it’s all free.

Crazy Egg

Crazy Egg offers website optimization tools such as heatmaps, scrollmaps, and user recordings to help businesses understand user behavior, improve website design, and increase conversions. It provides valuable insights into how visitors interact with a website, allowing for data-driven decision-making and enhanced user experience.

You can start with a 30-day free trial; contact Crazy Egg directly for a firm price. What I like about Crazy Egg is that it’s very easy to use.

Zoho PageSense

Zoho PageSense offers website optimization features including A/B testing, heatmaps, and funnel analysis to help businesses enhance user experience, increase conversions, and make data-driven decisions. It provides valuable insights into user behavior, allowing for targeted improvements to maximize website performance and achieve business goals.

You can start with a 15-day free trial (no credit card needed), and then it costs US $12.00 per month, billed annually.

Quite a range of businesses provide this service, for example Unbounce, Omniconvert, VWO, and Optimizely, to name a few more. The ones I have shown are ones I have used and recommend, either because they are free or because they are easy to use and cost-effective.

Conclusion

You must try it and use the results to boost your website’s success. Knowing your business and your posts, and how to refine them, will drive your business to the next level. I wish you the best of luck.

P.S. I derive no commission from any of these platforms to keep my advice neutral.

Steve

Some links on this site may be affiliate links, and if you purchase something through these links, I will make a commission on them.

There is no extra cost to you, and you could actually save money. Read our full affiliate disclosure here.
